Evaluation: An introductory guide

Dr Neil Raven | Independent consultant (neil.d.raven@gmail.com)

You may have noticed increased talk about evaluation across the academic sector. But what exactly is evaluation, why go to the trouble of evaluating something, and how might it be done? First, let us tackle the ‘what’ question.

What is evaluation?

Evaluation in higher education has traditionally been viewed in terms of teaching and learning. However, it also has an important role to play in supporting and enhancing the work of professional services. A consideration of what the term means helps to substantiate this claim. Evaluation is concerned with determining the quality and effectiveness of a programme, project or practice, usually against a set of objectives. One succinct summary describes it as the act of “comparing the actual and real with the predicted or promised” (James and Roffe, 2000; cited in Mavin, Lee and Robson, 2010, p4).

Why evaluate?

As the definition indicates, evaluation requires the collection and analysis of information or evidence. It is therefore a purposeful and planned activity and, as such, it comes with certain costs that should be borne in mind when addressing the question of ‘why evaluate’. Not least, it requires time and effort to organise, and to collect, collate and interpret the data generated (Mavin, Lee and Robson, 2010, p4). One should also be prepared to act on what an evaluation reveals. There are hidden opportunity costs as well: the time and resources dedicated to evaluation could have been spent on something else.

Yet, on the plus side, evaluation makes it possible to determine whether objectives have been met. It can also identify the strengths and weaknesses of a project or practice, and provide guidance on where improvements can be made and the form they might take. In summary, evaluation offers the prospect of decision making that is based on evidence rather than on tradition, established practice or even gut instinct. Arguably, it also has a particular role to play in the public sector, where considerable emphasis is now placed upon accountability and good governance (Mavin, Lee and Robson, 2010; Hargreaves, 2014; Research Councils UK, 2011).

How to evaluate

If the case has been made, the next and perhaps most fundamental question is how to conduct an evaluation. The first step is to identify what is going to be assessed. An early contribution to the discipline was provided by Kirkpatrick’s model (Dent et al, 2013, pp11-12; Baume, 2008, pp2-3). This suggests that evaluations should aim to capture evidence relating to the:

• Initial reactions of recipients to the activity

• Immediate impact on those engaged

• Medium-term effect on the behaviours and attitudes of those same individuals and

• Longer-term impact regarding the activity’s contribution to wider institutional objectives

To illustrate this model, let us take an example from the field of widening participation. Summer schools are often deployed to raise the HE awareness and aspirations of young people from backgrounds that are traditionally under-represented at university (those, for instance, from economically disadvantaged areas). An evaluation of such an event would begin by assessing how recipients felt about the experience, before considering its immediate impact on their knowledge and levels of interest. Turning to the medium-term effects, the evaluation would seek to determine how far the acquired knowledge and interest had translated into actions back in school. Ultimately, the aim would be to discover whether the summer school contributed to the university’s targets for the number of its new entrants from under-represented backgrounds.

Whilst Kirkpatrick’s model is still widely cited, it has certain limitations as a guide to evaluation (Mavin, Lee and Robson, 2010, p7). It does not consider evidence that would provide insights into how well the project was implemented (sometimes referred to as process evaluation): for example, how efficiently the summer school was organised (Research Councils UK, 2011, p2; Silver, 2004). Nor does it refer to determining the success of the activity in reaching its target audience. In our example, did the required number of young people participate in the summer school, and were they from under-represented backgrounds? Strictly speaking, this relates to monitoring, but it has an important role to play in assessing the success of an activity (Dent et al, 2013, p6; Research Councils UK, 2011, p2).

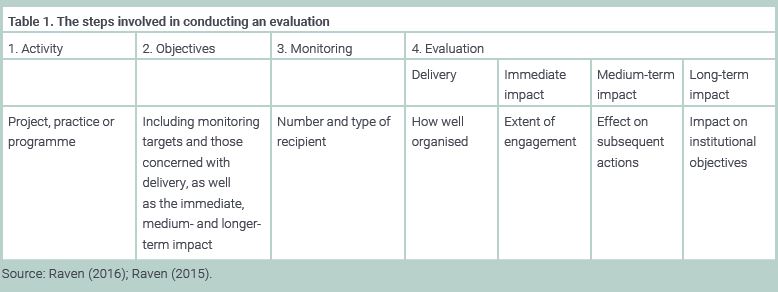

With these observations in mind, Table 1 organises the components of Kirkpatrick’s model into a logic framework, whilst also including the additional stages of monitoring and process evaluation. To offer a step-by-step guide, the framework requires the identification of the activity being evaluated, as well as the objectives it seeks to achieve. These also feature in the table.

Having decided on the activity to be evaluated and its objectives, including the number of participants it aims to engage, the organisation needs to identify how it will gather evidence on the activity’s reach, how well it was organised, and its immediate, medium- and longer-term impact. In our summer school example, monitoring data might come in the form of a register, while insights into the effectiveness of delivery could come from feedback forms completed by those supporting the event. Meanwhile, post-event questionnaires could reveal what recipients have learnt, and follow-up focus groups held with the same individuals a few weeks later could uncover any changes in classroom behaviour.

Institutional datasets relating to new HE entrants could be used to ascertain the number of former summer school participants who gained university places and the contribution this event made to overall entry targets for those from under-represented backgrounds.

In conclusion, and fittingly given the subject, feedback on the framework has been gathered from a range of professional service practitioners. Whilst this has confirmed the framework’s value to me, I am keen to build on this evidence base and would welcome readers’ comments and thoughts.

References

Baume, D (2008). ‘A toolkit for evaluating educational development ventures’. Educational Developments. 9(4): 1-7. Available at: seda.ac.uk/resources/files/publications_109_Educational%20Dev%209.4.pdf [accessed 24 August 2017].

Dent, P, Garton, L, Hooley, T, Leonard, C, Marriott, J and Moore, N (2013). Higher education outreach to widening participation, toolkits for practitioners. Evaluation. 4. 2nd edition. Bristol: Higher Education Funding Council for England. Available at: heacademy.ac.uk/resources/resource2322 [accessed 24 August 2017].

Hargreaves, J (2014). ‘Monitoring and evaluation: an insider’s guide to the skills you’ll need’. The Guardian (26 September). Available at: theguardian.com/global-development-professionals-network/2014/sep/26/monitoring-and-evaluation-international-developmentskills [accessed 24 August 2017].

Mavin, S, Lee, L and Robson, F (2010). The evaluation of learning and development in the workplace: A review of the literature. Cheltenham: Higher Education Funding Council for England. Available at: northumbria.ac.uk/static/5007/hrpdf/hefce/hefce_litreview.pdf [accessed 24 August 2017].

Raven, N (2015). ‘A framework for outreach evaluation plans.’ Research in Post-Compulsory Education. 20(2): 245-262.

Raven, N (2016). ‘Making evidence work: a framework for monitoring, tracking and evaluating widening participation activity across the student lifecycle.’ Research in Post-Compulsory Education. 21(4): 360-375.

Research Councils UK (2011). Evaluation: practical guidelines. A guide to evaluating public engagement activities. Swindon: Research Councils UK. Available at: rcuk.ac.uk/documents/publications/evaluationguide-pdf/ [accessed 24 August 2017].

Silver, H (2004). Evaluation Research in Education. Available at: scribd.com/document/142936432/Evaluation-Research-in-Education [accessed 24 August 2017].
